Chunking System Logs for AI Analysis

System logs are a critical component of IT operations and security management, capturing details about system performance, errors, and security incidents. The volume of log data generated by modern IT systems can be overwhelming, making it challenging to extract meaningful insights promptly. Artificial Intelligence (AI) can play a pivotal role in analyzing this data, but the unstructured and verbose nature of logs poses a unique challenge. Chunking, the process of breaking down large text data into manageable pieces, is a technique that can help prepare system logs for AI analysis. In this article, we’ll explore the concept of chunking system logs and provide code snippets to illustrate how this can be implemented.

Why Chunk Logs?

Chunking transforms large continuous logs into smaller, more digestible segments that an AI model can easily process. This method helps avoid memory issues and enables parallel processing, thus speeding up analysis. By focusing on chunks that contain full events or transactions, AI models can maintain the context necessary for accurate interpretation and correlation of log entries.

Chunking Strategies

There are several strategies for chunking logs, including time-based chunking, event-based chunking, and size-based chunking. Time-based chunking splits logs by specific time intervals, such as an hour or a day. Event-based chunking divides logs based on certain events or log entries, such as session start and end markers. Size-based chunking breaks the logs into fixed-size segments, ensuring that each chunk is small enough to be handled efficiently by the AI system.

Log Parsing

To ensure your LLM understands understands your data in the log lines, we can parse and provide the keys with the data in the prompt. Alternatively, we can simply provide the GROK pattern in the prompt above the log lines. A GROK pattern typically consists of predefined patterns that can match common text structures like dates, IP addresses, file paths, and more. You can also create custom patterns to match specific text formats. GROK patterns wrap the regular expressions in a way that allows them to be named, so that the matched text portions can be easily captured into fields.

Here’s a simple example of how a GROK pattern might look for parsing a typical log file entry:

%{TIMESTAMP_ISO8601:timestamp} %{LOGLEVEL:loglevel} %{IP:clientip} %{GREEDYDATA:message}Each of the %{PATTERN:fieldname} is a GROK pattern where:

- TIMESTAMP_ISO8601, LOGLEVEL, and IP are the names of predefined patterns for matching timestamps, log levels, and IP addresses, respectively.

- fieldname is the name of the field into which the matched data will be placed. For example, the timestamp of the log entry will be placed in a field called timestamp.

- GREEDYDATA is a special GROK pattern that matches any text, similar to .* in regular expressions, and it’s used here to match the rest of the log message.

This GROK pattern would parse a log entry into structured fields, thus simplifying the process of log analysis.

To effectively use GROK patterns, you typically need to have access to a GROK processor, like the one provided in Logstash, or use a GROK library in a programming language, such as the grok gem in Ruby or pygrok in Python.

Practical Implementation

For illustration purposes, let’s consider an example where we have a large system log file that we want to chunk by size, specifically into chunks of approximately 100 lines each. Note that while the sizes and techniques can be adjusted, this example provides a framework for implementing a basic chunking operation.

Here’s a Python code snippet to achieve size-based chunking:

def chunk_log_file(log_file_path, lines_per_chunk=100):

chunk_number = 1

current_chunk = []

with open(log_file_path, 'r') as log_file:

for line in log_file:

current_chunk.append(line)

if len(current_chunk) >= lines_per_chunk:

yield current_chunk

chunk_number += 1

current_chunk = []

# Yield any remaining lines in the last chunk

if current_chunk:

yield current_chunk

# Usage

log_file_path = 'system_logs.log'

for chunk in chunk_log_file(log_file_path):

# Process each chunk with AI analysis

In this code snippet, chunk_log_file is a generator function that yields chunks of the log file, one at a time. The function reads the log file line by line, collects lines in current_chunk, and yields the chunk once it reaches the specified size. Any remaining lines are yielded as the last chunk.

Here’s a Python code snippet to achieve time-window-based chunking

from datetime import datetime, timedelta

from dateutil.parser import parse

from collections import defaultdict

def parse_log_line(line):

timestamp_str, message = line.split(' ', 1)

timestamp = parse(timestamp_str)

return timestamp, message

def chunk_logs_by_time(logs, window_size=timedelta(minutes=5), overlap=timedelta(minutes=5)):

chunks = defaultdict(list)

start_time = None

end_time = None

for log_line in logs:

timestamp, message = parse_log_line(log_line)

if start_time is None or timestamp >= end_time:

# Initialize or slide the window

if start_time is None:

start_time = timestamp

else:

start_time += overlap

end_time = start_time + window_size

# Check and add log to all relevant chunks

current_time = start_time

while current_time <= timestamp < current_time + window_size:

chunk_key = current_time.strftime('%Y-%m-%d %H:%M:%S')

chunks[chunk_key].append(log_line)

current_time += overlap

return chunks

After preparing the chunks, you’ll need a function to analyze them with AI. While the specifics of the AI analysis depend on the use case (e.g., anomaly detection, pattern recognition, root cause analysis), below is a skeleton function to represent this step:

prompt_template """

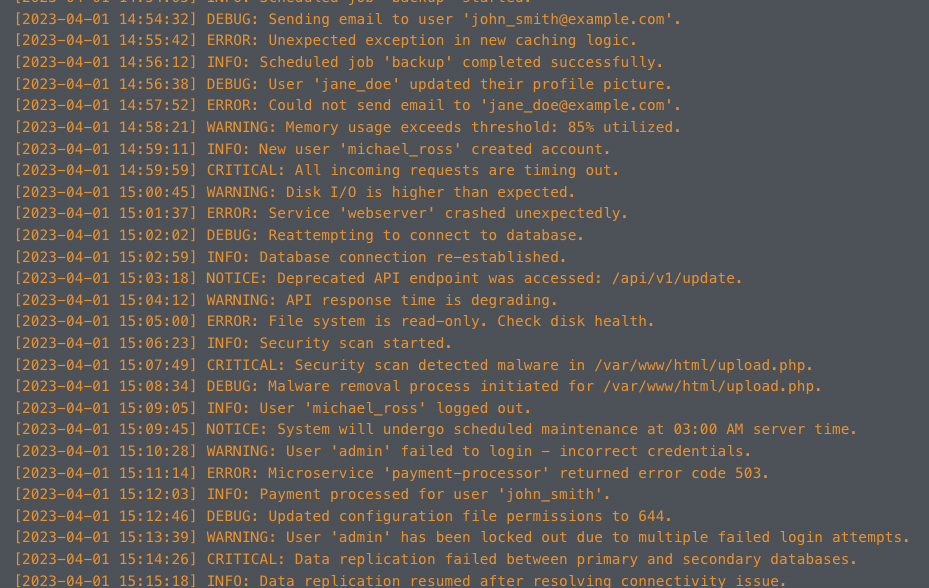

The service started experiencing intermittent failures on April 1, 2023, and eventually stopped responding altogether. Prior to these issues, we deployed a new version of the software, which included database schema updates and new caching logic.

Here are the log entries from the period in question, specifically focusing on the hour leading up to the service outage:

{logs}

Can you analyze the provided logs and determine:

1. What specific events or issues likely led to the service becoming unresponsive?

2. How do the recent changes implemented in the last software deployment correlate with these issues?

3. Are there any patterns or anomalies in the logs that precede the critical failure?

Please provide a summarized analysis of the potential root cause based on the logs. Additionally, what steps would you recommend for further investigating this issue or preventing it in the future?

"""

def analyze_chunk_with_ai(chunk):

# Placeholder for AI analysis logic

# This could involve natural language processing, pattern recognition, etc.

processed_data = ai_model.process(prompt_template.format(logs=chunk))

store_processed_data(processed_data)

Realtime Analysis

Instead of chunking your logs or storing them in a separate datastore, we could simply retrieve them from the central log store software. Logstash for example has an incredibly robust query language that can be leveraged to return a chunk of logs for analysis in realtime. Query by time, level, system, or even similarity search with a log embedding plugin.

Considerations for AI Analysis

Once logs are chunked, they can be fed into an AI system for analysis. However, to be effective, certain considerations must be taken into account:

- Data Consistency: Ensure that chunking does not split related log entries across different chunks, as this could lead to loss of context and negatively impact analysis.

- Feature Extraction: Use natural language processing (NLP) to extract features from unstructured log data. Techniques such as tokenization, entity recognition, and keyword extraction can be applied.

- Anomaly Detection: Apply machine learning algorithms to identify deviations from normal patterns that could indicate security breaches or system issues.

- Scalability: Design the system for scalability by leveraging parallel processing and distributed computing frameworks like Apache Spark or similar technologies.

Conclusion

Chunking system logs is a crucial step in preparing the data for AI analysis. By dividing large volumes of log data into manageable segments, AI models can more effectively process and extract insights from logs. The strategies and code snippets provided in this article are a starting point for implementing a chunking system. Keep in mind the importance of maintaining data consistency, leveraging NLP for feature extraction, and designing for scalability to handle the vast amounts of log data generated by modern IT systems.

As AI continues to evolve and more sophisticated analysis techniques emerge, the processes of chunking and preparation of log data will be essential for harnessing the full potential of AI in system log analysis.

Happy Coding!