Building the Iconic ChatGPT Frontend

OpenAI, an artificial intelligence research lab consisting of the industry’s most talented researchers and engineers, has been pivotal in advancing the field of AI. Among its many contributions, the ChatGPT application stands out as a leading example of how AI can be built into frontend development. Celebrated for a seamless user experience and innovative features, ChatGPT has captured the interest of the tech community worldwide.

To understand what makes the frontend of ChatGPT so iconic, we’ll take a peek behind the curtain at the backend and frontend tech stack, then create a simplified version of ChatGPT with a tutorial.

Frontend Tech Stack

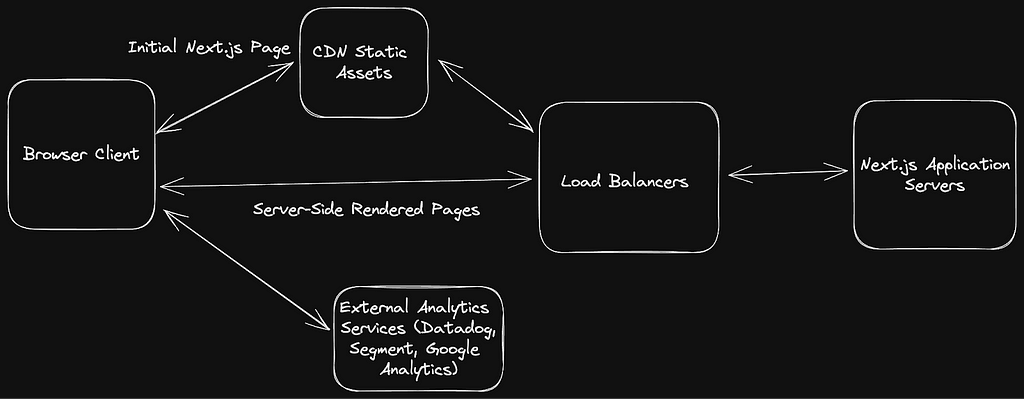

ChatGPT leverages a robust frontend tech stack to deliver its cutting-edge interactive experience. At the core are JavaScript frameworks like React and Next.js, which enable the team to build a highly responsive interface. Through React’s component-based architecture, developers can efficiently manage stateful UI interactions, while Next.js supports Server-Side Rendering that aids in fast page loads even on content-heavy sections.

The choice of CDN with Cloudflare ensures low-latency access to the application worldwide, while security features such as Cloudflare Bot Management and HSTS protect against malicious attacks. Notably, the tech stack also emphasizes performance optimization using tools like Webpack and HTTP/3 to streamline asset delivery.

Other key components of the frontend tech stack include:

- For data handling, libraries such as Lodash and core-js play a vital role by providing utility functions that simplify complex operations.

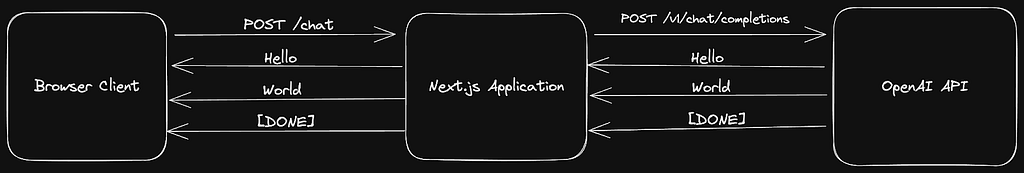

- To complete the chat experience, Server-Sent Events are used to stream the response as it's being generated, simulating the AI typing the answer to you in real time.

- The integration of analytics tools like Segment, Datadog and Google Analytics allows the team to gather insights on user behavior, which is critical for iterative improvements to the user interface and overall experience.
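To make the Server-Sent Events bullet concrete, here is a minimal sketch of what a streamed answer looks like on the wire. The helper name formatSseEvent is hypothetical and not taken from ChatGPT's code; it only illustrates the text/event-stream framing, where each payload line is prefixed with "data: " and a blank line ends the event.

```typescript
// Hypothetical helper (an illustration, not ChatGPT's implementation):
// encode a payload as one Server-Sent Events message. Each line of the
// payload gets its own "data:" field, and a blank line terminates the event.
function formatSseEvent(payload: string): string {
  const dataLines = payload.split("\n").map((line) => `data: ${line}`);
  return dataLines.join("\n") + "\n\n";
}

// A streamed answer is then just a sequence of small events.
const tokens = ["Hello", ", ", "world"];
const wire = tokens.map(formatSseEvent).join("");
console.log(JSON.stringify(wire));
```

Splitting the payload on newlines matters because a raw newline inside a single data field would otherwise be read as the end of that field.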

Let’s Build It

Creating a basic version of ChatGPT’s frontend requires an understanding of its technical layout. We’ll now go step by step through a minimal replica of the application’s core chat flow.

Step 1: Environment setup

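Start by setting up your development environment. Ensure Node.js and npm are installed, then create a new Next.js application (which includes React) using npx create-next-app@latest chatgpt-clone. If the installer asks, choose TypeScript and the Pages Router, since the files in this tutorial live in the pages directory. Change into the new directory with cd chatgpt-clone.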

Step 2: Designing the UI

Create the main chat interface using React components. Structure your components to reflect the iconic layout of the ChatGPT frontend — input area, conversation pane, and submit button. Use CSS or a library like Styled Components for styling.

Create a new file at the path pages/index.tsx. The styles import assumes a CSS module named index.module.css in the same directory:

    import { useState } from "react";
    import type { ChangeEvent, FormEvent } from "react";
    // chat.ts has a default export and lives in src/, outside pages/
    import chatHandler from "../src/chat";
    import styles from "./index.module.css";

    type Message = { role: string; content: string };

    export default function ChatInterface() {
      const [messages, setMessages] = useState<Message[]>([]);
      const [input, setInput] = useState("");

      const handleSubmit = (event: FormEvent<HTMLFormElement>) => {
        event.preventDefault();
        if (input.trim() === "") return; // ignore empty prompts
        // not awaited: the handler updates state as chunks stream in
        chatHandler(messages, setMessages, input);
        setInput("");
      };

      const handleInputChange = (event: ChangeEvent<HTMLInputElement>) => {
        setInput(event.target.value);
      };

      return (
        <div className={styles["chat-container"]}>
          <form onSubmit={handleSubmit}>
            <div className={styles["conversation-pane"]}>
              {messages.map((message, index) => (
                <div key={index} className={styles["conversation-message"]}>
                  {message.content}
                </div>
              ))}
            </div>
            <div className={styles["chat-input"]}>
              <input
                type="text"
                className={styles["input-area"]}
                onChange={handleInputChange}
                value={input}
              />
              <button className={styles["submit-button"]} type="submit">
                Submit
              </button>
            </div>
          </form>
        </div>
      );
    }

Step 3: Handle streaming message responses

Create a handler function that wraps the interaction with our backend in a simple-to-use interface. It takes the prompt and the React state setter, and updates the chat log as chunks are received.
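The core of that update step can be sketched as a pure function that folds each incoming token into the conversation. The ChatMessage type and the appendToken helper are hypothetical names introduced for illustration; the tutorial's own handler takes a slightly different route, so treat this as one possible way to structure the state update rather than the canonical one.

```typescript
type ChatMessage = { role: "user" | "assistant"; content: string };

// Hypothetical helper: return a new message list with `token` appended to the
// trailing assistant message, creating that message if it does not exist yet.
// The input array and its objects are never mutated.
function appendToken(messages: ChatMessage[], token: string): ChatMessage[] {
  const last = messages[messages.length - 1];
  if (last && last.role === "assistant") {
    return [...messages.slice(0, -1), { ...last, content: last.content + token }];
  }
  return [...messages, { role: "assistant", content: token }];
}

// Simulate three streamed tokens arriving after a user question.
let log: ChatMessage[] = [{ role: "user", content: "Hi" }];
for (const token of ["Hel", "lo", "!"]) {
  log = appendToken(log, token);
}
console.log(log);
```

Because the function returns fresh arrays and objects, it can be passed to a React state setter in its functional form, for example setMessages((prev) => appendToken(prev, token)), and React will see a new reference on every token.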

Create a new library file at the path src/chat.ts:

    type Message = { role: string; content: string };

    // Sends the conversation to our backend and streams the reply into state.
    export default async function chatHandler(
      messages: Message[],
      setMessages: (messages: Message[]) => void,
      prompt: string
    ) {
      // configure message format for the OpenAI API
      const userMessage: Message = { role: "user", content: prompt };
      const aiMessage: Message = { role: "assistant", content: "" };
      const msgs = [...messages, userMessage];

      // set initial question in the chat log
      setMessages(msgs);

      // make SSE request to the backend
      const response = await fetch("/api/chat", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
        },
        body: JSON.stringify({
          messages: msgs,
        }),
      });

      // process the response from AI as it comes in
      if (!response.ok || !response.body) return;
      const reader = response.body
        .pipeThrough(new TextDecoderStream())
        .getReader();

      while (true) {
        const { value, done } = await reader.read();
        if (done) break;
        const lines = value.split("\n").filter((line) => line.trim() !== "");
        for (const line of lines) {
          // strip the SSE field name, keeping the token's own whitespace
          const message = line.replace(/^data: /, "");
          aiMessage.content += message;
          // pass a copy so React sees a new object on every update
          setMessages([...msgs, { ...aiMessage }]);
        }
      }
    }

Step 4: Establishing the backend logic

To implement the logic that captures user input and displays responses, you’ll need to configure an API file to handle requests sent to the backend. This API handler establishes an SSE connection with the frontend, makes a streaming request to the OpenAI API endpoint, and forwards the response chunks back to the frontend.
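The forwarding step hinges on interpreting each line of the upstream stream. As a rough sketch, the helper below classifies one line as a text token, the end-of-stream marker, or something to skip. The names ChunkResult and parseCompletionLine are hypothetical, and the sketch assumes the chunk shape choices[0].delta.content and the [DONE] sentinel that the handler in this step also relies on.

```typescript
// Result of inspecting one line of the upstream stream.
type ChunkResult =
  | { kind: "token"; text: string }
  | { kind: "done" }
  | { kind: "skip" };

// Hypothetical helper: interpret a single "data: ..." line from a streaming
// chat completion. Assumes the chunk shape choices[0].delta.content.
function parseCompletionLine(line: string): ChunkResult {
  const payload = line.replace(/^data: /, "").trim();
  if (payload === "") return { kind: "skip" };
  if (payload === "[DONE]") return { kind: "done" };
  try {
    const json = JSON.parse(payload);
    const text = json?.choices?.[0]?.delta?.content;
    return typeof text === "string" && text.length > 0
      ? { kind: "token", text }
      : { kind: "skip" };
  } catch {
    // A line that is not valid JSON (for example one cut off at a chunk
    // boundary) is skipped instead of crashing the stream.
    return { kind: "skip" };
  }
}

console.log(parseCompletionLine('data: {"choices":[{"delta":{"content":"Hi"}}]}'));
console.log(parseCompletionLine("data: [DONE]"));
```

Returning a tagged result instead of throwing keeps the read loop simple: write on "token", end the response on "done", and ignore "skip".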

Create our backend API handler at the path pages/api/chat.ts

    import type { NextApiRequest, NextApiResponse } from "next";

    export default async function handler(
      req: NextApiRequest,
      res: NextApiResponse
    ) {
      // SSE headers: keep the connection open, disable caching and compression
      res.writeHead(200, {
        Connection: "keep-alive",
        "Content-Encoding": "none",
        "Cache-Control": "no-cache, no-transform",
        "Content-Type": "text/event-stream",
      });

      const body = req.body;

      // request a streamed completion from OpenAI
      const response = await fetch("https://api.openai.com/v1/chat/completions", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          Authorization: `Bearer ${process.env.OPENAI_API_KEY}`,
        },
        body: JSON.stringify({
          model: "gpt-3.5-turbo",
          messages: body.messages,
          stream: true,
        }),
      });

      // always close our stream, even when the upstream request fails
      if (!response.ok || !response.body) {
        res.end();
        return;
      }

      const reader = response.body.pipeThrough(new TextDecoderStream()).getReader();

      while (true) {
        const { value, done } = await reader.read();
        if (done) break;
        const lines = value.split("\n").filter((line) => line.trim() !== "");
        for (const line of lines) {
          const message = line.replace(/^data: /, "");
          if (message === "[DONE]") {
            res.end();
            return;
          }
          let jsonValue;
          try {
            jsonValue = JSON.parse(message);
          } catch {
            continue; // skip a line that was split across chunks
          }
          // forward only chunks that carry text
          const content = jsonValue.choices?.[0]?.delta?.content;
          if (content) {
            res.write(`data: ${content}\n\n`);
          }
        }
      }

      // upstream closed without sending [DONE]
      res.end();
    }

Step 5: Putting It All Together

With the UI components, backend API, and interaction logic in place, run through the complete conversation flow in the application. Check that the app receives user messages and displays AI responses as they are being generated. Make sure to set the environment variable OPENAI_API_KEY (for example in a .env.local file, which Next.js loads automatically) before running the app with npm run dev.

If you do not already have an API key, [visit the OpenAI website](https://platform.openai.com/signup) to create a free account.
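A missing key otherwise only shows up as a failed request to OpenAI, so it can help to fail fast with a clear message. The sketch below uses a hypothetical helper named requireEnv that is not part of Next.js or this tutorial's code; it takes the environment as a parameter so it can be tried out without a real key.

```typescript
// Hypothetical helper: read a required variable from an environment-like
// object and throw a descriptive error when it is missing or empty.
function requireEnv(
  name: string,
  env: Record<string, string | undefined>
): string {
  const value = env[name];
  if (!value) {
    throw new Error(`Missing environment variable ${name}`);
  }
  return value;
}

// Demonstration with a stand-in environment object (placeholder value).
const demoEnv = { OPENAI_API_KEY: "sk-placeholder" };
console.log(requireEnv("OPENAI_API_KEY", demoEnv).length > 0);
```

In the API route you would pass process.env as the second argument and call the helper before writing any response headers.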

Technical Architecture

The process outlined above results in a simple interactive web application reminiscent of the ChatGPT interface. While this version is elementary, it underlines the fundamental aspects of technical architecture that can have profound implications on user experience, conversions, and performance.

For instance, server-side rendering with Next.js can significantly improve SEO and load times, directly influencing user retention. Additionally, security integrations ensure a safe user environment, potentially increasing user trust and engagement metrics.

To take full advantage of the analytics platforms mentioned earlier, a composability system like Canopy can be critical for syncing all your data to a central location. This centralized approach keeps actionable insights readily available, which can optimize the user journey and inform decision-making. The Canopy composability platform aims to speed up development by allowing sync, transformation, and export for all your data sources.

One of the magical experiences users love with ChatGPT is the live-typing effect while the AI is generating the answer to your question. This is delivered using Server-Sent Events (SSE), which is an excellent fit for AI transformer model responses. With a conventional API we usually know exactly what data to return as soon as the request arrives, but with AI the full answer is unknown until generation completes. Because the model produces its output token by token, we can return the answer to the client in chunks as it’s being generated.
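One practical detail of chunked delivery is that network chunks do not line up with event boundaries, so a client has to buffer text until a full event has arrived. Below is a minimal sketch of such a buffer. The createSseParser name is hypothetical, and the sketch assumes events are separated by "\n\n" only; the full SSE format also allows other line endings and fields such as event and id, which are ignored here.

```typescript
// Hypothetical helper: accumulate raw text chunks and emit the data payload
// of every complete SSE event (events are assumed to end with a blank line).
function createSseParser(onData: (data: string) => void) {
  let buffer = "";
  return (chunk: string): void => {
    buffer += chunk;
    let boundary = buffer.indexOf("\n\n");
    while (boundary !== -1) {
      const rawEvent = buffer.slice(0, boundary);
      buffer = buffer.slice(boundary + 2);
      // Keep only data fields and drop the single optional space after "data:".
      const dataLines = rawEvent
        .split("\n")
        .filter((line) => line.startsWith("data:"))
        .map((line) => line.slice(5).replace(/^ /, ""));
      if (dataLines.length > 0) onData(dataLines.join("\n"));
      boundary = buffer.indexOf("\n\n");
    }
  };
}

// Two events arrive split across three network chunks.
const received: string[] = [];
const feed = createSseParser((data) => received.push(data));
feed("data: Hel");
feed("lo\n\ndata:  wor");
feed("ld\n\n");
console.log(received);
```

Only one leading space is stripped after the field name, so a token that itself begins with a space (common for word tokens) keeps it.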

Building reliable streaming applications that pull data from different 3rd-party sources can be a huge challenge for developers. Maintaining integrations, transformations, and handling of the streaming data can require a lot of upfront investment to get running efficiently.

Composability systems like Canopy aim to simplify this process while also accelerating developer productivity. With Canopy, you can pull from multiple sources, transform the response data, and stream the results to your frontend application. The builder UI in Canopy allows even non-technical users such as project managers to prepare backend APIs for frontend developers to use when building frontend experiences.

Conclusion

OpenAI’s ChatGPT stands as a testament to what stellar frontend development can achieve. From its selected tech stack to its user-centric design, every aspect of the application has been purpose-built to create an engaging AI chat experience. As burgeoning developers, we stand to learn a great deal by observing and dissecting such groundbreaking work.

Keen to explore the mentioned techniques and tools further? Embrace the challenge and begin crafting your trailblazing applications today.